Quote:

Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.

Single click and double click

Together with the move to Vista, I decided to use single click to open items. It makes no sense to a computer-illiterate person why some items require a single click and some require a double-click. We old folks just know which is which. In fact, this is what I tell my father: single click first. If it doesn't work, double click.

No more. I'm going to see if single-clicking works for me. So far, I seem to be coping well. I just need to remember not to click on the files "just for fun", especially in Explorer!

Using Vista

I got a new company notebook to replace my old SOTA 2. It came with Vista Enterprise. My first thought was to install XP on it, but I decided to give Vista a try. After all, we need to keep up with the times.

The notebook comes with 2GB RAM and a 75GB HD. I lost 6GB right away as it is used to hold the re-image files. The space is difficult to reclaim as that partition sits at the front of the disk.

I wanted to have two partitions, but it was very difficult to do so using the company's installation disk. It wipes the disk clean. The only way is to install the standalone Vista. I don't want to go that route for now. (I did it for Windows XP for total control.)

Vista has a built-in partition manager, but it is not smart enough to move files to shrink the partition. The drive has 50GB free space after installation, but Vista could only free up 30GB. In the end, I decided to live with just one partition. If it works well, I may use just one partition from now onwards.

Unlike the past, I decided not to turn off all special effects. I turned most of them off, especially sliding and shadows, but I left the window effects alone. There are two special effects that I dislike, but I couldn't turn them off. The first is the shadows around the windows. The second is smooth scrolling.

I feel the so-called Aero look is over-rated. It does not have enough transparency effect. I would love a true transparent background for Explorer.

Ultimately, it took a few days to get used to Vista. It is quite similar to XP, but requires more clicks to find and access the advanced functionality. It tries to be simple for the consumer by looking cleaner. It reminds me of OS X.

Vista is slower than XP, that's for sure. With 2GB RAM, it accesses the HD more often than expected. In fact, it accesses the HD seemingly at random, even when it should be idling. It takes a long time to boot and log in. Opening files and starting apps have a small but noticeable lag.

Vista now has simple revision history for files. I use revision control for my source code. It's indispensable. Let's see how it works for normal files.

Two things broke out-of-box: scandisk and defrag. Scandisk refused to run because the disk was in use. That's dumb. Defrag is dumbed down and is very slow. Defrag also now defrags to 64MB fragments only. I don't mind that; it is usually good enough. The real problem is that it's too slow to be useful, so I downloaded a free third-party defrag tool.

Also, Vista shows a blank screen when it starts to hibernate. I'm not used to that.

Why need new drivers?

Windows 3.0, Windows 3.1, Windows 95/98, Windows 2000/XP and Vista/7 all require different drivers for the same hardware. Why?

From Windows 2000 onwards, Windows should allow drivers to run in legacy mode (with full functionality). We can expect a device to have a driver for the OS of its era, but it is pretty unusual to have a driver for a new OS.

Microsoft is pretty good at backward compatibility for apps, but not so for drivers.

Why some OSs don't become widely used

Windows owns 90% of the market today. It started with Windows 3.0, but Windows 95 was the one that took the world by storm. Since then, it is generally assumed that every Windows version is successful. It is not.

Windows 2000 had high hardware requirements (CPU, graphics, memory, HD) and required new drivers. Many older PCs couldn't use it. Many older devices didn't have the drivers. We remember Windows 2000 today as a lean OS, an irony.

Windows XP uses the same drivers as Windows 2000, so the transition was pretty painless. It has higher HW requirements, but these can be solved. No drivers, especially for the graphics card, means you can't use the OS at all.

Most consumers never upgraded to 2000 (it wasn't marketed to them anyway); they went directly from Windows 98 to XP. By then, the HW was fast enough and there were enough drivers. XP wasn't without its problems when it came out, but they have been forgotten with the passage of time. (XP came out in Oct 2001.)

Windows Vista uses a new driver model, so many older PCs couldn't be upgraded. Today, after two years, it has just 23% market share. (Vista came out in Nov 2006.)

The next version of Windows, Windows 7, will use the same drivers as Vista, so I foresee it will gain market share pretty fast. The situation is just like 2000-XP. Vista is like 2000, and Windows 7 is like XP.

I appear in Google search!

I was absent-mindedly browsing for Princess Mononoke analysis and suddenly I noticed a webpage of mine appearing as the 261st entry! Wow! (That's on the 27th page.)

Of course, it's very rare for someone to search past the first five pages. I usually adopt two rules to stop searching: stop after x good links, or stop x pages after the last good link.
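
The two stopping rules can be written down as a small function. This is only a sketch: `pagesToRead`, `maxGoodLinks` and `patience` are made-up names standing in for the two values of x, and the input is simply a count of good links on each results page.

```typescript
// Hypothetical helper: how many result pages get read before giving up.
// goodLinksPerPage holds the number of useful links found on page 1, 2, 3, ...
function pagesToRead(
  goodLinksPerPage: number[],
  maxGoodLinks: number, // rule 1: stop after this many good links in total
  patience: number      // rule 2: stop this many pages after the last good link
): number {
  let page = 0;
  let goodSoFar = 0;
  let pagesSinceLastGood = 0;

  for (const found of goodLinksPerPage) {
    page++;
    goodSoFar += found;
    // A page with at least one good link resets the patience counter.
    pagesSinceLastGood = found > 0 ? 0 : pagesSinceLastGood + 1;

    if (goodSoFar >= maxGoodLinks || pagesSinceLastGood >= patience) {
      return page;
    }
  }
  return page; // ran out of results before either rule fired
}
```

Note that in this sketch the second rule also fires if the first few pages contain nothing useful at all.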

I need a new backup strategy

I'm using the most primitive backup strategy ever: copying the folders manually to my backup HD. It works, but it uses a lot of space. Using a backup HD is much easier than writing to CD-R (only 650 MB at a time). I didn't have access to a DVD burner until recently.

I still don't think I'll use a real backup app, but I may start to use SyncToy for Windows XP. This is even more useful than backup because I need to keep my folders consistent across computers. Again, right now I'm doing it manually.

This is also a good time to reorganize my folder structure. With folder sync, I should define the same folder structure for all my PCs, including the backup HD, and place the files in the correct place immediately (rather than the download folder, for example).
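
With the same folder structure everywhere, deciding what to copy becomes a simple comparison of two listings. The sketch below is an illustration only: it is not how SyncToy works, and `FileEntry` and `filesToCopy` are hypothetical names. It assumes each file is described by its path relative to the shared root and its last-modified time.

```typescript
interface FileEntry {
  path: string;     // path relative to the common root folder
  modified: number; // last-modified time, e.g. milliseconds since the epoch
}

// One-way sync: list the source files that the backup lacks or holds only as an older copy.
function filesToCopy(source: FileEntry[], backup: FileEntry[]): string[] {
  return source
    .filter((src) => {
      // Skip the file only if the backup already has a copy that is at least as new.
      const upToDate = backup.some(
        (b) => b.path === src.path && b.modified >= src.modified
      );
      return !upToDate;
    })
    .map((src) => src.path);
}
```

This only works because the relative paths match on both sides, which is the point of fixing the folder structure first.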

It is also time to decide whether to delete some old installation packages. Apps are usually not a problem because they are small. I usually keep two versions: a tested one and a just downloaded one. I'm questioning the need to keep Win2k. Also, I have WinXP, SP2 and SP3! Is there a need to keep SP2 around?

End of the road (SOTA 2)

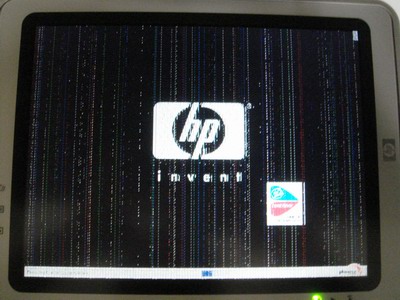

The hard disk gave a loud click when I turned on the tablet PC. I knew it was game over.

The good news is that I last backed up just a few days ago, so I didn't lose much data.

The bad news is that I only backed up my most commonly used folders. My last comprehensive backup was months back, and it wasn't 100% comprehensive.

I don't care about losing data that I can download again. However, I may have lost some pictures that I haven't transferred to my backup HD. Well, I can't remember what they are, so they must not be very important.

The tablet PC is on its deathbed already. Even though it'll still work if I replace the HD, I don't really want to struggle with it anymore.

I've taken it apart to see what's wrong, but I couldn't find any smoking gun.

Using JS to overcome difficult CSS problems

It is very hard to align a non-table element vertically using pure CSS. I don't bother now; I just use JS to move the element.

In fact, I'm thinking of using JS to achieve equal-height columns. Using pure CSS to do it requires very obfuscated markup.
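
The arithmetic behind both tricks is tiny, which is why JS is tempting here. A minimal sketch, with hypothetical function names and with the heights passed in as plain numbers so that it does not depend on any browser API:

```typescript
// Top offset (px) that vertically centres an element inside its container.
function centredTop(containerHeight: number, elementHeight: number): number {
  // Clamp at 0 so an oversized element is not pushed above the container.
  return Math.max(0, Math.floor((containerHeight - elementHeight) / 2));
}

// Equal-height columns: every column gets the height of the tallest one.
function equalColumnHeight(columnHeights: number[]): number {
  return columnHeights.reduce((tallest, h) => Math.max(tallest, h), 0);
}
```

In a page using jQuery, the inputs could come from `.height()` and the results could be applied with `.css()`. The values need to be recomputed whenever the content or the window size changes.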

Despite the hype of using liquid layouts to cater to everything from handhelds (400px) to super-wide screens (2000px), a particular layout is usually optimal within a much smaller range. For now, I hook onto the window's resize event and change the stylesheet for small screens (<800px).
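
The decision itself is a one-liner. In the sketch below, the 800px threshold is the one mentioned above, while the stylesheet file names are placeholders and not the site's real files.

```typescript
const SMALL_SCREEN_MAX_WIDTH = 800; // px; narrower windows get the small-screen stylesheet

// Hypothetical file names; substitute the real stylesheets.
function stylesheetForWidth(widthPx: number): string {
  return widthPx < SMALL_SCREEN_MAX_WIDTH ? "small.css" : "normal.css";
}
```

A resize handler would call this with the current window width and swap the stylesheet only when the answer changes, since resize events fire many times while the window is being dragged.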

(There are some advanced CSS selectors that will help. They don't work in IE, of course.)

Many web designers like to use pure HTML/CSS. However, I don't mind using some JS magic if it is the simpler solution.

Why still need printer drivers?

I don't see why printer drivers are still necessary in this day and age. Why is a printer language needed? Why can't a printer use JPEG/PNG as its native input formats?

If you want people to print, you've got to make it easy to do so.

The first thing is to make it easy to hook up to any device. For connections that are not one-to-one (i.e. over a network), the printer must be easy to find and connect to.

The second thing is to make it easy to print! Image files are ubiquitous. Everyone knows how to read/write to JPEG/PNG files.

If we want printing to be better supported, we must not think of it as a separate function. Most handheld devices have no concept of printing, for example. We should think of it in terms of saving. For example, every application allows you to save your data. If you "save" your file to the printer device, it gets printed out instead!

Website revamp objectives

I hope to achieve these with the new website design:

- Unified CSS design

- Themeable

- Cater to handheld devices

- Still somewhat usable without JavaScript

- Still somewhat viewable on older browsers (IE 6)

- Reachable by search engines

My website, version 3

Together with the decision to use jQuery, I will use this opportunity to define a new set of helper JS files, CSS and directory structure.

A brief history:

- Version 1 (<2003): gray background, use HTML editor, not strict HTML

- Version 2 (2003): gray background, use text editor, strict HTML, simple CSS

- Version 2.1 (2007): plain white background, better use of CSS

- Version 3 (2009): to use jQuery to create more dynamic pages

- Version 3.x: to use AJAX/PHP to allow persistent user interaction

I will shift the frequently accessed pages to the new structure first. Other pages will not be touched for now; in fact, they may never be touched at all. I still have very old version 1 webpages lying around.

My website is a mix of the old and the new — very rojak. However, I don't want to touch the old pages unnecessarily.

New tools for my website

I have decided to use jQuery on my website, rather than use the native DOM functions to manipulate the DOM.

The current version 1.3.2 is 117 kB, 56 kB minified and 20 kB gzipped. The gzipped size is still acceptable.

I will not use jQuery UI for now. The current version 1.7.1 is 298 kB, 188 kB minified and 46 kB gzipped. If I use both, it means every page has a 66 kB overhead!
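
For the record, here is the sum behind that overhead, using only the sizes quoted above (all in kB). The type and variable names are just for illustration.

```typescript
interface LibrarySize {
  full: number;     // kB, uncompressed source
  minified: number; // kB
  gzipped: number;  // kB, minified and gzipped
}

// Sizes as quoted in the text for versions 1.3.2 and 1.7.1.
const jqueryCore: LibrarySize = { full: 117, minified: 56, gzipped: 20 };
const jqueryUi: LibrarySize = { full: 298, minified: 188, gzipped: 46 };

function combinedSize(a: LibrarySize, b: LibrarySize): LibrarySize {
  return {
    full: a.full + b.full,
    minified: a.minified + b.minified,
    gzipped: a.gzipped + b.gzipped,
  };
}

const bothLibraries = combinedSize(jqueryCore, jqueryUi);
```

The gzipped total is the figure that matters for download time, provided the server actually sends the files compressed.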

However, worrying about the size is missing the woods for the trees.

jQuery simplifies the development significantly. It makes webpage manipulation so simple that you only have to think about what to do and don't have to worry about how to do it, at least for simple self-contained pages.

jQuery has its downsides. It's all too easy to write slow code, because it's so convenient to use. It's easy to forget that almost every call requires a walk through the DOM. In other words, you still need to know how to program efficiently for a real-world app.
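
To make the cost visible, the sketch below uses a toy tree in place of the real DOM and counts how many nodes each lookup has to visit. Everything here (`ToyNode`, `findByTag`, the counter) is invented for the illustration; it is not jQuery's implementation, which has faster paths for some selectors.

```typescript
// A toy stand-in for the DOM, so the cost of each lookup can be counted.
interface ToyNode {
  tag: string;
  children: ToyNode[];
}

interface WalkCounter {
  visited: number; // how many nodes have been inspected so far
}

// Walks the whole subtree, the way a lookup must when it has no shortcut.
function findByTag(root: ToyNode, tag: string, counter: WalkCounter): ToyNode[] {
  counter.visited++;
  let matches: ToyNode[] = root.tag === tag ? [root] : [];
  for (const child of root.children) {
    matches = matches.concat(findByTag(child, tag, counter));
  }
  return matches;
}

// Slow: repeats the full walk on every pass through the loop.
function countItemsSlow(root: ToyNode, passes: number, counter: WalkCounter): number {
  let touched = 0;
  for (let i = 0; i < passes; i++) {
    touched += findByTag(root, "li", counter).length;
  }
  return touched;
}

// Faster: walk once, keep the result, reuse it.
function countItemsCached(root: ToyNode, passes: number, counter: WalkCounter): number {
  const items = findByTag(root, "li", counter);
  let touched = 0;
  for (let i = 0; i < passes; i++) {
    touched += items.length;
  }
  return touched;
}

const toyPage: ToyNode = {
  tag: "body",
  children: [
    {
      tag: "ul",
      children: [
        { tag: "li", children: [] },
        { tag: "li", children: [] },
        { tag: "li", children: [] },
      ],
    },
  ],
};
```

Both functions return the same answer, but on this five-node tree ten passes cost 50 node visits in the slow version and 5 in the cached one. The jQuery equivalent is to store the result of a selector in a variable instead of repeating the selector inside a loop.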

Time to switch to a new video format?

I only encode low bitrate videos, for portability reasons. I put them on my HD so that I can watch them on-the-go. It is so much more convenient than carrying the DVDs around.

Although size is important, quality matters too. First and foremost, the video must look good on a small 14" screen. Second, it should be watchable on a 32" widescreen TV. As such, I'm not aiming for the ultra-low bitrates (~300kbps) meant for portable devices, where the screen is 6" or less.

I am using 2-pass Xvid at 600kbps and 64kbps MP3, output to AVI file. Subs are in a separate file. I used to hardcode the subs for convenience, but now I think soft-subs are the way to go.

I am considering using H.264 at 450kbps for video and 32kbps AAC for audio. This will cut the size by roughly 27% (482kbps in total instead of 664kbps). I am also thinking of outputting to MP4 or MKV so that everything can be put in one file.

(I use these bitrates as my starting point.)
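
The size estimate follows directly from the bitrates. A small sketch, assuming 1 kbps = 1000 bits per second and 1 MB = 1,000,000 bytes, and ignoring container overhead and subtitle files; the 25-minute episode length is just an example value.

```typescript
interface EncodeProfile {
  videoKbps: number;
  audioKbps: number;
}

function totalKbps(profile: EncodeProfile): number {
  return profile.videoKbps + profile.audioKbps;
}

// Approximate file size in MB for a given running time.
function sizeMB(profile: EncodeProfile, minutes: number): number {
  const bits = totalKbps(profile) * 1000 * minutes * 60;
  return bits / 8 / 1e6;
}

const xvidMp3: EncodeProfile = { videoKbps: 600, audioKbps: 64 }; // current settings
const h264Aac: EncodeProfile = { videoKbps: 450, audioKbps: 32 }; // settings being considered

// Fraction of the size saved by switching (independent of the running time).
const fractionSaved = 1 - totalKbps(h264Aac) / totalKbps(xvidMp3);

const episodeMinutes = 25; // hypothetical episode length
const currentSizeMB = sizeMB(xvidMp3, episodeMinutes);
const plannedSizeMB = sizeMB(h264Aac, episodeMinutes);
```

For the example episode this gives about 124.5 MB now versus about 90.4 MB with the new settings, a saving of a little over a quarter.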

H.264 is an amazing codec. It is so much better than Xvid or other codecs at low bitrates. It also has fewer visual artifacts because it blurs details instead of showing blocking. The downside is that decoding is CPU intensive. (But that matters less now, thanks to better code and faster CPUs.)

I'm finally able to consider H.264 because the free x264 encoder now allows keyframes to be specified. I need them to cut the video properly after encoding to get rid of unwanted footage, such as the opening and closing. It is more convenient to cut after encoding.
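
The reason keyframes matter: a cut made without re-encoding generally has to start on a keyframe, because the frames after it depend on it. The sketch below only illustrates that constraint. It assumes the keyframe timestamps (in seconds) are already known, and `keyframeAtOrBefore` is a hypothetical helper, not part of x264 or any cutting tool.

```typescript
// Returns the latest keyframe at or before the wanted cut time,
// or undefined if no keyframe is early enough. The list need not be sorted.
function keyframeAtOrBefore(keyframes: number[], wanted: number): number | undefined {
  let best: number | undefined = undefined;
  for (const k of keyframes) {
    if (k <= wanted && (best === undefined || k > best)) {
      best = k;
    }
  }
  return best;
}
```

If the returned time is not the wanted time, the cut will include some unwanted footage, which is why placing keyframes at the intended cut points during encoding is useful.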

I need to experiment to see if it'll work.

Mobile non-music audio encoding

I like to encode "mobile" videos to put on my HD, so naturally size is important.

Shows are very dialogue-based, so I normally use 64kbps MP3. It is possible to use 32kbps MP3, but it doesn't work all the time. (Maybe I'm not doing it correctly.)

I'm considering switching to another format that will allow me to use 32kbps AAC.

Audio encoding

Audio encoding is so simple compared to video encoding.

Use Exact Audio Copy to extract the raw audio file from the CD, then use LAME to encode to MP3, using the RazorLame frontend. All are free tools. Although the latest EAC allows direct encoding to MP3, I still prefer to do it separately for more control.

And it's fast too. It's not difficult to be 2x faster than real-time.

I use MP3 because I'm used to it. Even though newer formats are more efficient, they are not as widely supported. Also, all formats perform well at decent bitrates (> 128kbps).

I keep my settings simple: 192kbps VBR for normal stuff and 256kbps VBR for long-term storage.

I used to listen to many 128kbps MP3s (*ahem*) and while they sounded good initially, you could feel something missing, especially if you listened to the CD directly afterwards. And this was on low-end computer speakers.

On the other hand, I've listened to 256kbps MP3s on normal hifi speakers and they sounded as good as the CD. 256kbps is generally considered indistinguishable from the CD.

I used to encode 256kbps CBR (constant bitrate), but when VBR (variable bitrate) became stable and widespread, I started to use 192kbps VBR.

My philosophy is to encode just once. Storage is cheap and will get cheaper. That's why I stick with 192kbps (at least). For borrowed CDs, I'll definitely use 256kbps VBR.

I don't have a portable music player, but if I get one, maybe I'll encode a "mobile" 96kbps AAC (this should be similar to 128kbps MP3), in addition to the normal MP3.

Programming with continuations

The biggest stumbling block to programming in JS is its inability to block. A function must execute quickly, or the browser interface will be blocked. You can't blindly write a while-loop that iterates over many elements.
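
One common workaround is to cut the loop into small batches and hand control back to the browser between batches. The sketch below shows the idea; `processInChunks` and `DeferFn` are made-up names, and the way of deferring is passed in as a parameter so the function does not depend on any particular environment.

```typescript
// Something that runs a task later, after giving the browser a chance to breathe.
type DeferFn = (task: () => void) => void;

// Processes items a few at a time; the "rest of the loop" is a callback.
function processInChunks<T>(
  items: T[],
  chunkSize: number,
  handle: (item: T) => void,
  onDone: () => void,
  defer: DeferFn
): void {
  const size = Math.max(1, Math.floor(chunkSize)); // guard against a zero-sized chunk
  let next = 0;

  function runChunk(): void {
    const end = Math.min(next + size, items.length);
    for (const item of items.slice(next, end)) {
      handle(item);
    }
    next = end;
    if (next < items.length) {
      defer(runChunk); // continue later instead of blocking now
    } else {
      onDone();
    }
  }

  runChunk();
}

// In a browser, the last argument would typically be:
//   (task) => { setTimeout(task, 0); }
```

The first batch runs immediately and every later batch runs from a deferred callback, so anything that must happen after the loop has to go into `onDone`.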

In JS, if you need a modal dialog box or anything involving user interaction, the follow-on action must be in the callback.

The problem is that the program logic is all over the place. Obviously I haven't found a way to do it properly.
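
Here is what that looks like for a two-question dialog. This is a sketch of the problem, not a solution: `AskFn` is a hypothetical dialog function that shows a question and calls back later with the answer, and `saveWizard` is an invented example.

```typescript
// Hypothetical dialog function: asks a question, calls back with the answer later.
type AskFn = (question: string, onAnswer: (answer: string) => void) => void;

// With a blocking dialog the logic would read top to bottom:
//   fileName = ask("File name?"); answer = ask("Overwrite?"); ...
// Without blocking, each follow-on step lives inside the previous callback.
function saveWizard(ask: AskFn, onFinish: (summary: string) => void): void {
  ask("File name?", (fileName) => {
    ask("Overwrite " + fileName + "? (yes/no)", (answer) => {
      if (answer === "yes") {
        onFinish("saved " + fileName);
      } else {
        onFinish("cancelled");
      }
    });
  });
}
```

Even with only two steps, the result is delivered through a third callback and the nesting grows with every extra question.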

A paradigm shift

My current (traditional) thinking is to use HTML to create a basic input control, CSS to style it and JS to control its behaviour.

However, this does not work well for dynamic webpages. This is because you need to make sure that the HTML and JS are in sync.

It is better to use JS to create everything. The HTML only contains the placeholder to determine the control's location.
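
As a rough sketch of the idea, a control can be described by a small spec object and its markup generated from that spec. `ControlSpec` and `renderSelect` are hypothetical names, and the output is built as a string so the example does not depend on a browser.

```typescript
interface ControlSpec {
  id: string;
  label: string;
  options: string[];
}

// Escape text before placing it inside markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Builds the whole control from the spec; the page only supplies an empty placeholder.
function renderSelect(spec: ControlSpec): string {
  const options = spec.options
    .map((option) => "<option>" + escapeHtml(option) + "</option>")
    .join("");
  const id = escapeHtml(spec.id);
  return (
    '<label for="' + id + '">' + escapeHtml(spec.label) + "</label>" +
    '<select id="' + id + '">' + options + "</select>"
  );
}
```

The page itself would contain only an empty element marking the location, and the script would fill it in, for example with jQuery's `.html()`. Because the markup and the behaviour are generated from the same spec, they cannot drift out of sync.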

This requires a paradigm shift in thinking. There is no more graceful fallback. No JS support means no webpage.